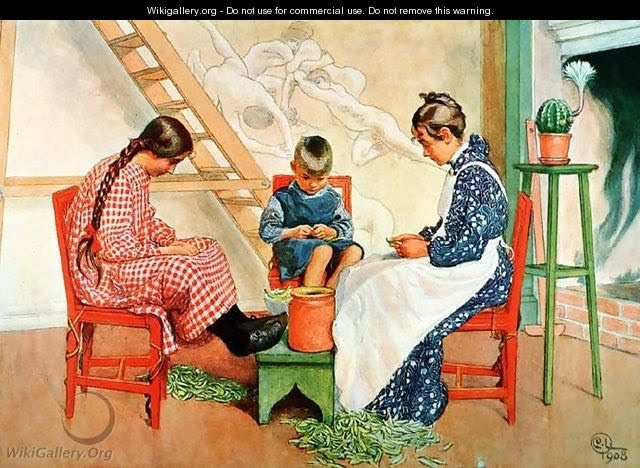

I love this painting by Carl Larsson. Here is a domestic scene of a mother and two children shelling fresh peas into an earthenware pot, pods heaping up on the floor. They are immersed in their work in companionable silence. They can anticipate a tasty seasonal meal. This is not opening a bag of frozen peas and boiling them for a few minutes. This is slow food, savoured for its own sake. The slow food movement, according to Wikipedia, started in the 1980s as a protest against the spread of fast food. It spread rapidly, with the aim of promoting local foods and traditional gastronomy. It was in Aarhus, in August 2012, that I first hit on the idea of slow science. Just as with food production, slowness can be a virtue: it can be a way to improve quality and resist the pull of quantity.

I had to give a short speech to celebrate the end of the Interacting Minds Project and the launch of the Interacting Minds Centre. Looking back on the preceding five years, I wondered whether the Project would have been predicted to succeed or to fail. I found that there had been far more reason to predict failure than success. One reason was that the procedures for getting started were very slow, so slow that they made us alternately laugh and throw up our hands in disbelief.

But what if the Project succeeded not despite the slowness, but because of it? Chris and I had been plunged from the fast-moving, competitive UCL environment in London into a completely different intellectual environment, one where curiosity-driven research was encouraged and competition did not count for much. Having come to Denmark for some extended stays, and for at least one month every year since 2007, we have been converted. We are almost in awe of slowness now. We celebrate Slow Science.

Can Slow Science be an alternative to the prevalent culture of publish or perish? Modern life has put time pressure on scientists, particularly in the way they communicate: e-mail, phones and cheap travel have made communication almost instant. I still sometimes marvel at the ease of typing and editing papers with search, delete, replace, copy and paste. Even more astonishing is the speed of searching libraries and performing data analysis. What effect have these changes in work habits had on our thinking habits?

Slow Food and Slow Science are not about slowing down for its own sake, but about increasing quality. Slow science means getting into the nitty-gritty, just as podding fresh peas with your fingers is part of producing a high-quality meal. Science is a slow, steady, methodical process, and scientists should not be expected to provide quick fixes for society’s problems.

I tweeted these questions and soon got a response from Jonas Obleser, who sent me the 2010 manifesto of slowscience.org. It had already put into words what I had been vaguely thinking about:

> Science needs time to think. Science needs time to read, and time to fail. Science does not always know what it might be at right now. Science develops unsteadily, with jerky moves and unpredictable leaps forward—at the same time, however, it creeps about on a very slow time scale, for which there must be room and to which justice must be done.
>
> Slow science was pretty much the only science conceivable for hundreds of years; today, we argue, it deserves revival and needs protection. … We do need time to think. We do need time to digest. We do need time to misunderstand each other, especially when fostering lost dialogue between humanities and natural sciences. We cannot continuously tell you what our science means; what it will be good for; because we simply don’t know yet.

These ideas have resurfaced again and again. In 2011, science journalist John Horgan published a blog post titled “The Slow Science Movement Must Be Crushed”, with the punch line that if Slow Science caught on, and scientists published only high-quality data that had been double- and triple-checked, he would have nothing left to write about:

> Does science sometimes move too fast for its own good? Or anyone’s good? Do scientists, in their eagerness for fame, fortune, promotions and tenure, rush results into print? Tout them too aggressively? Do they make mistakes? Exaggerate? Cut corners? Even commit outright fraud? Do journals publish articles that should have been buried? Do journalists like me too often trumpet flimsy findings? Yes, yes, yes. Absolutely.

I liked this, but the topic received little further discussion on blogs until the more recent so-called replication crisis. I wonder whether the crisis has converted more scientists to Slow Science. Earlier this year, the Dynamic Ecology blog ran a post, “In praise of slow science”, which attracted many comments:

> It’s a rush rush world out there. We expect to be able to talk (or text) anybody anytime anywhere. When we order something from half a continent away we expect it on our doorstep in a day or two. We’re even walking faster than we used to.
>
> Science is no exception. The number of papers being published is still growing exponentially at a rate of over 5% per year (i.e. doubling every 10 years or so). Statistics on growth in number of scientists are harder to come by … but it appears … the individual rate of publication (papers/year) is going up.
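As a back-of-envelope check on the growth figures in this quotation (not part of the Dynamic Ecology post itself), the doubling time $T_2$ of anything that grows at a constant annual rate $r$ follows from compound growth, $(1+r)^{T_2} = 2$:

$$
T_2 = \frac{\ln 2}{\ln(1+r)}, \qquad
r = 0.05 \;\Rightarrow\; T_2 \approx \frac{0.693}{0.0488} \approx 14.2\ \text{years}, \qquad
r = 0.07 \;\Rightarrow\; T_2 \approx \frac{0.693}{0.0677} \approx 10.2\ \text{years}.
$$

So “doubling every 10 years or so” corresponds to growth of roughly 7% per year, which is consistent with the quoted “over 5% per year”.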

There has been much unease about salami slicing to create as many papers as possible, and about publishing ephemeral results in journals with scanty peer review. Clearly, if we want to improve quality, there are some hard questions to be answered.

How do we judge quality science? Everyone believes their science is of high quality. It’s like judging works of art. But deep down we know that some pieces of our research are just better than others. What are the hallmarks? More pain? More critical mass of data? Perhaps you yourself are the best judge of which are your best papers. In some competitive schemes you are required to submit or defend only your best three, four or five papers. This is a good way of making comparisons fairer between candidates who may have had different career paths and different levels of productivity. More is not always better.

How to improve quality in science? That’s an impossible question, especially if we can’t measure quality, and if quality may become apparent only years later. Even if there were an answer, it would have to be different for different people and different subjects. Science is an ongoing process of reinvention. Some have suggested that it is necessary to create the right social environment to incubate new ideas and approaches: the right mix of people talking to each other. When tender new plants are to be grown, a whole greenhouse has to be there for them to thrive in. Patience is required when there is an unrelenting focus on methodological excellence.

Who would benefit? Three types of scientists. First, scientists who are tending new shoots and have to exercise patience. These are people with new ideas in new subjects. Their ideas often fall between disciplines but might eventually crystallise into a discipline of their own. In this case, getting grants and getting papers published in traditional journals is difficult and takes longer. Second, scientists who have to take time out for good reasons, often temporarily. If they are put under pressure, they are tempted to write up preliminary studies and to salami-slice bigger ones. Third, scientists who have succeeded against the odds despite neurodevelopmental disorders such as dyslexia, autism spectrum disorder or ADHD, for whom fast science is a barrier. It is well known that they need extra time for the reviewing and writing-up stages of research. The extra time can reveal a hidden brilliance and originality that might otherwise be lost.

We should also consider when Slow Science is not beneficial. If there is a race on and priority is all, then speed is essential. Sometimes you cannot wait for the usual safety procedures of double-checking and replication. This may be the case if you have to find a cure for a new, highly contagious illness. In this case, be prepared for many sleepless nights. Sometimes a short, effortful spurt can produce results, and the pain is worth it. But it is not possible to maintain such a pace. Extra effort means extra hours, and hence exhaustion and eventually poorer productivity.

An excuse for being lazy? Idling, procrastinating and plain old worrying can sometimes bring forth bright flashes of brilliance. Just going over data again and again can produce the satisfying artisanal feelings one might expect to find in a ceramic potter or furniture maker. Of course, the thoughts inspired by quiet downtime will be lost if they are not put into effect. Slow science is all about quality, and quality is never achieved by idling, taking short cuts or over-promising. Slow science is not a way of avoiding competition, and not a refuge for the ultra-critical who can’t leave well enough alone. Papers don’t need to be perfect to be published.

What about the competitive nature of science? Competition cannot be avoided in a time of restricted funding and more people chasing after fewer jobs. In competition there is a high premium on coming first. I was impressed by a clever experiment by Phillips, Hertwig, Kareev and Avrahami (2014), “Rivals in the dark: How competition influences search in decisions under uncertainty” (Cognition, 133(1), 104-119). These authors used a visual search task in which players had to spot a target as quickly as possible and indicate their decision with a button press. The twist was that players were in a competitive situation and did not know when their competitors would make their decision. If they searched carefully, they might lose out because another player got there first; if they searched only cursorily, they might be lucky. It turned out that, for optimal performance, it was adaptive to search only minimally. To me this is a metaphor for the current problem of fast science.

A solution to publish or perish? There may be a way out, and game theory comes to our aid. The publish or perish culture is like the prisoner’s dilemma: you need to be slow to have more complete results, and you need to be fast to make a priority claim, both at the same time. Erren, Shaw & Morfeld (2015) set out this scenario for two scientists who can either ‘defect’ (publish early, with flimsy data) or ‘cooperate’ (publish late, with more complete data), and they suggest a possible escape. Rational prisoners would defect, and the command ‘publish or perish’ seems to confirm this. The authors suggest that researchers could be allowed to establish priority using the equivalent of the sealed envelope, a practice used by the Paris Académie des Sciences in the 18th century. Meanwhile, prestigious institutions would need to foster rules that favour the publication of high-quality rather than merely novel work. If both these conditions were met, the rules of the game would change. Perhaps there is a way to improve quality through slow science.
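To make the dilemma concrete, here is a minimal Python sketch of the two-scientist game. The payoff numbers and the second, “sealed envelope” table are hypothetical illustrations, not values taken from Erren, Shaw & Morfeld (2015). The only thing the sketch checks is the logic: under the classic prisoner’s dilemma ordering, defecting (publishing early) is the best response whatever the rival does, whereas a rule change that protects priority and rewards complete work can make cooperating (publishing late) the best response instead.

```python
from typing import Dict, List, Optional, Tuple

# "C" = cooperate (publish late, complete data); "D" = defect (publish early, flimsy data).
Move = str
# Maps (row move, column move) to (row payoff, column payoff). All numbers are hypothetical.
Payoffs = Dict[Tuple[Move, Move], Tuple[int, int]]

MOVES = ("C", "D")


def best_responses(payoffs: Payoffs, opponent: Move) -> List[Move]:
    """Row player's best responses when the rival's move is fixed."""
    scores = {m: payoffs[(m, opponent)][0] for m in MOVES}
    best = max(scores.values())
    return [m for m in MOVES if scores[m] == best]


def dominant_strategy(payoffs: Payoffs) -> Optional[Move]:
    """Return a move that is a best response to every rival move, or None."""
    for m in MOVES:
        if all(m in best_responses(payoffs, opp) for opp in MOVES):
            return m
    return None


# Hypothetical publish-or-perish payoffs with the classic ordering T > R > P > S (5 > 3 > 1 > 0).
publish_or_perish: Payoffs = {
    ("C", "C"): (3, 3),  # both publish late, with complete work
    ("C", "D"): (0, 5),  # the early publisher takes the priority claim
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),  # both rush flimsy results into print
}

# Hypothetical changed rules: a sealed envelope protects the slower scientist's
# priority and institutions reward complete work, so rushing no longer pays.
sealed_envelope: Payoffs = {
    ("C", "C"): (3, 3),
    ("C", "D"): (3, 1),
    ("D", "C"): (1, 3),
    ("D", "D"): (1, 1),
}

print(dominant_strategy(publish_or_perish))  # D
print(dominant_strategy(sealed_envelope))    # C
```

The sketch only tests for a dominant strategy in a one-shot, two-player game; it is not a model of real publication incentives.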

Great post.

Slow science is hard but also rewarding. We’ve recently completed a five-year project, and one of the publications to come out of it literally represents years of work by 12 researchers, including fieldwork across the globe, countless collaborative sessions, coding design and careful quantification.

When I shared this with Uta, she noted that the duration and intensely collaborative nature of the project can’t really be read off from the author contributions. This is absolutely true. The limited categories available for classifying author contributions leave little room for things like duration, gestation, interdisciplinary training and a million other things necessary to get research like this right (especially intangible things such as arriving at fundamental research questions, building a stimulating intellectual atmosphere, fostering a shared commitment to cooperation, etc.). This made me realise that, in important ways, our current modes of academic publication invite us to pretend that all these preconditions are immaterial and that findings are timeless entities that just appear when you’ve got your hands on some data and methods. Hmm…

We always knew that our project was going to take a lot of time, and we have tried to optimise outcomes by giving everyone opportunities to work on smaller collaborative or single-authored subprojects with a faster publication turnaround while working on larger and more complex multi-authored papers. For instance, we edited a special issue in which all contributors have their own articles. We also formed smaller teams to publish a number of interesting findings ahead of the bigger picture. One of those findings, our report on a possibly universal word, went on to become one of the most-read PLOS ONE papers of all time, which in turn helped draw attention to the larger project.

I guess one moral I would draw from our experience is that you often cannot afford to make an exclusive choice for slow science (especially not when PhD students and early career scholars are involved), but you can design a large project in such a way as to make for a good blend of slow science and faster science.

I agree with MD: one partial way out of the quality versus quantity dilemma is to have a mix of studies, some high-risk and long-term, others most likely to pay off but still somewhat interesting. In the best case, they all fit together for efficiency.

Still, it is bad for science that scientists need to think like this nowadays and feel unable to prioritize quality.

> Competition cannot be avoided in a time of restricted funding and more people chasing after fewer jobs.

Funding and manpower have always been limited. We have not made science into a rat race because of a lack of resources; that lack has always been there.

The culture of micro-managing by numbers, rather than having basic trust in professionals to do their job, is unfortunately spreading, and science is being swept along with it.

Apropos competitive environments, Hintze et al. (2015) show in their recent open-access article “The Janus face of Darwinian competition” that, while weak competition promotes adaptive behaviour, extreme competition leads agents to sacrifice accuracy for speed: fearing that competitors might act first, agents do not spend sufficient time gathering evidence about the true state of the world and make rash decisions. One wonders whether the “publish-or-perish” culture in science has similar effects: to beat competitors to a grant or an interesting discovery, researchers might skip the necessary control experiments and reach premature conclusions.